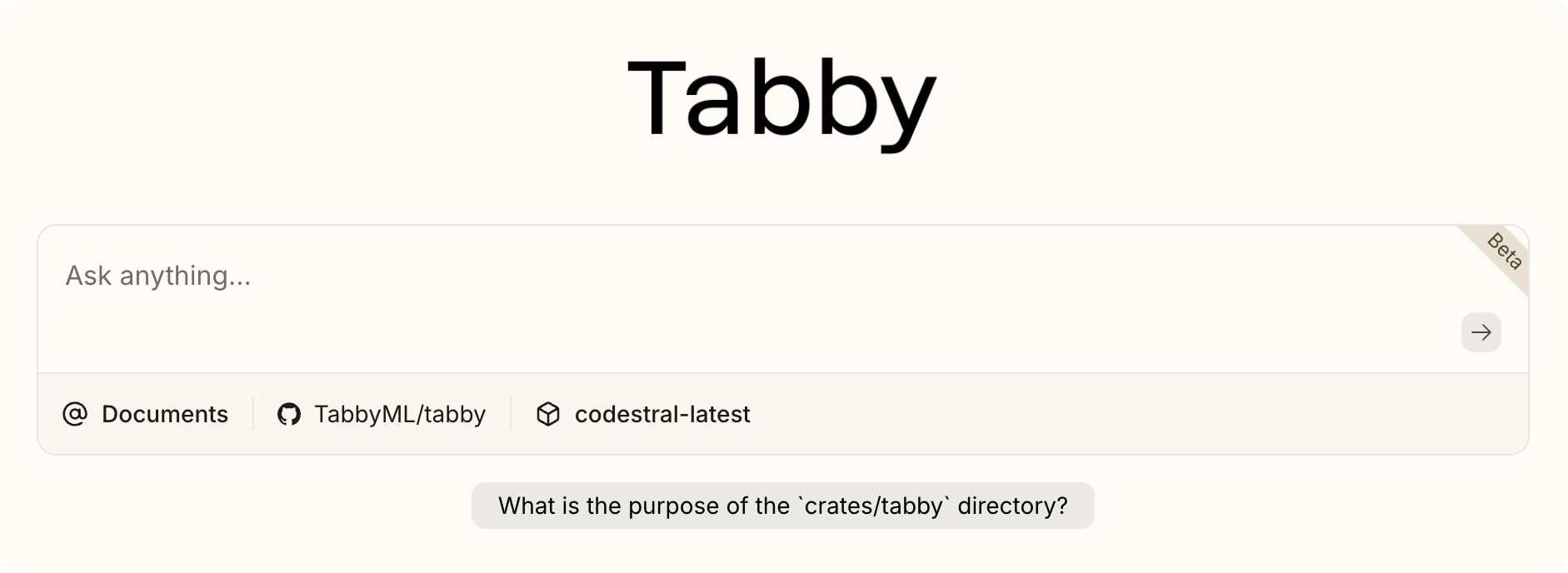

Answer Engine

Tabby provides an Answer Engine on the homepage, which can utilize the chat-model LLM and related context to answer user questions.

Contexts

The Answer Engine can query the following contexts to provide more accurate answers.

For more information about contexts, please refer to the Context Provider.

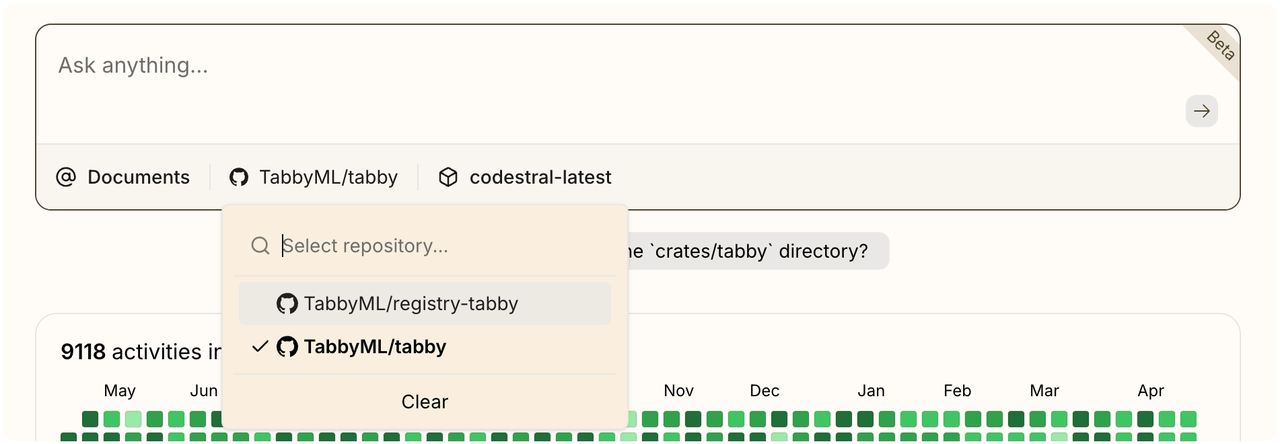

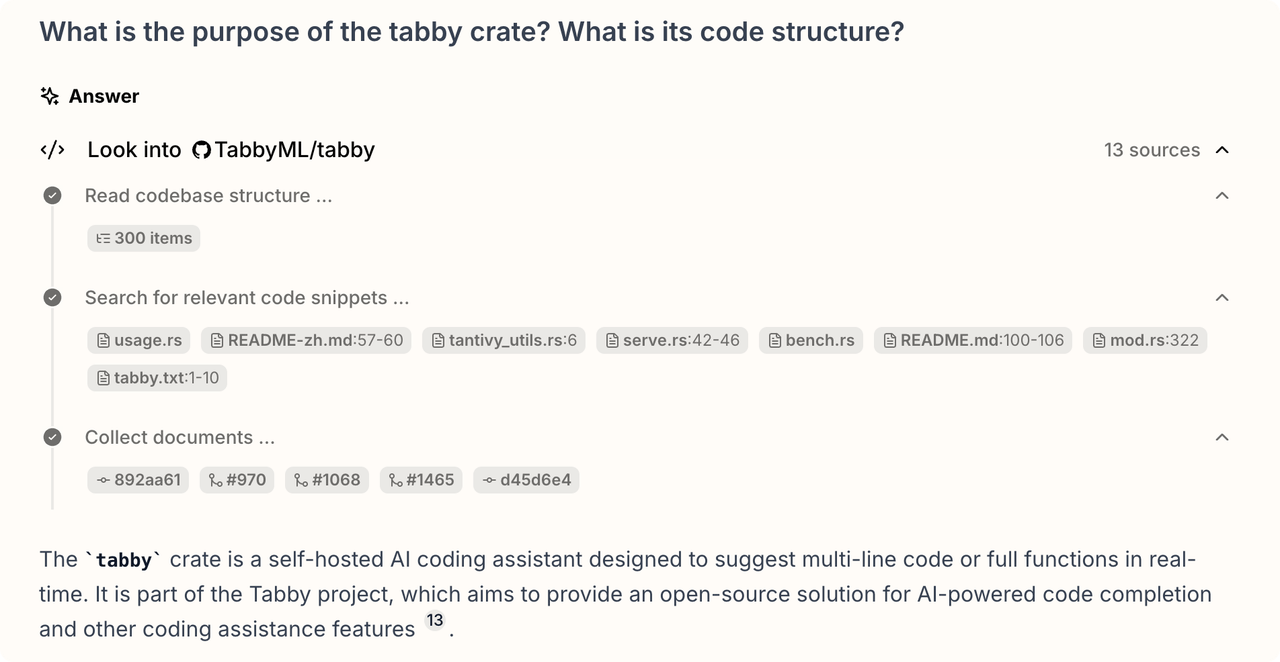

Source Code Repositories

The source code context connects Tabby to a source code repository from Git, GitHub, GitLab, etc. Tabby fetches the data from the repository and stores it in the index.

When users select a repository and ask a question, the Answer Engine retrieves relevant code and documents from the index to provide contextually accurate responses.

Developer Documentation

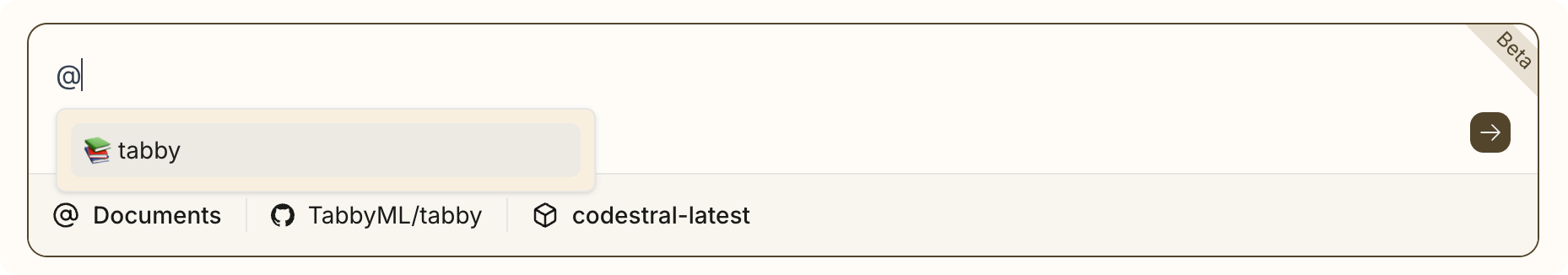

When developer documentation contexts are set, users can simply type @ to select documents they wish to include. Alternatively, users can click the icons below the chat box to select contexts directly. Tabby will then include these documents when interacting with LLMs.

When users select developer documentation and ask a question, the Answer Engine retrieves relevant documents from the index to provide contextually accurate responses.

Thread

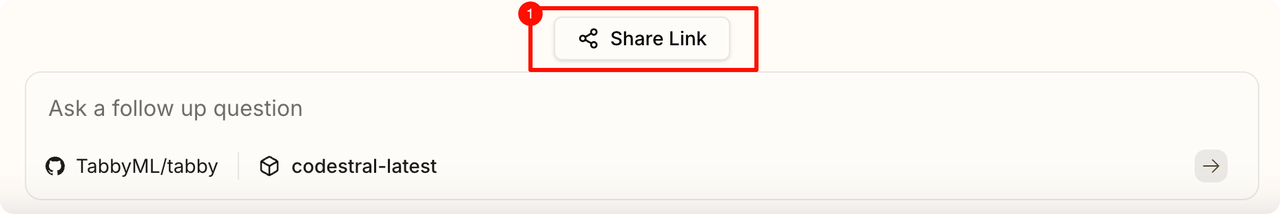

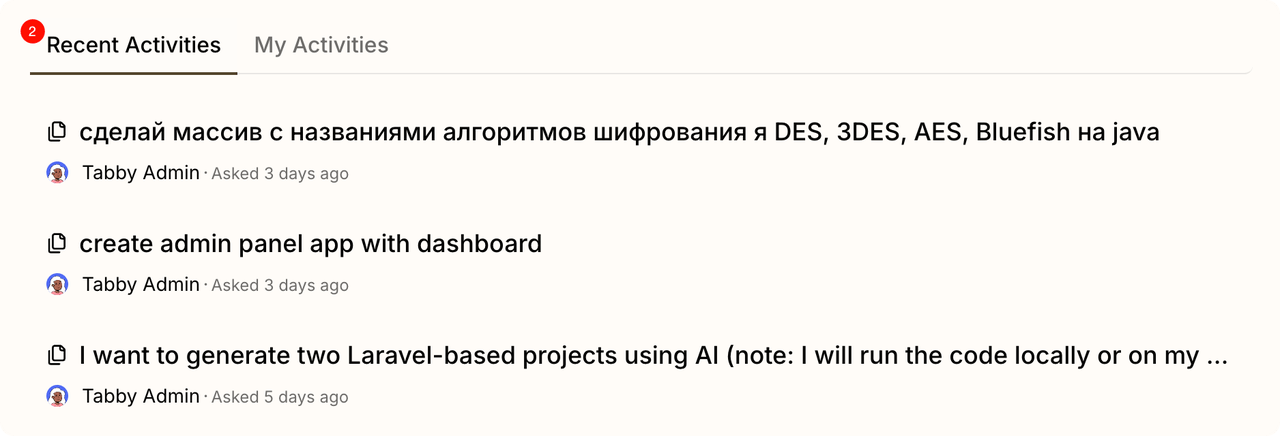

All threads created by a user appear in their My Activities tab. By default, new threads are temporary and are automatically deleted after a week. A thread is persisted only when its creator clicks the Share Link button, after which it becomes visible to all team members under the Recent Activities tab.

Thread Permissions

- Write access: Creator only (edit / delete / ask follow-ups)

- Read access

  - Ephemeral threads: Creator & server admins

  - Persistent threads: All team members
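
The rules above can be read as a small access-control model. The following is a minimal sketch of that reading, not Tabby's actual implementation: the `Thread` class, its field names, and the helper functions are all hypothetical, and the one-week lifetime is taken from the default described earlier.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Assumption: ephemeral threads live for one week, per the default described above.
EPHEMERAL_TTL = timedelta(weeks=1)


@dataclass
class Thread:
    """Hypothetical model of a thread; not Tabby's real data structure."""
    creator: str
    created_at: datetime
    persisted: bool = False  # becomes True once the creator clicks Share Link


def can_write(thread: Thread, user: str) -> bool:
    # Write access (edit / delete / ask follow-ups) is limited to the creator.
    return user == thread.creator


def can_read(thread: Thread, user: str, is_admin: bool = False) -> bool:
    # Persistent threads are readable by all team members.
    if thread.persisted:
        return True
    # Ephemeral threads are readable by the creator and server admins only.
    return user == thread.creator or is_admin


def is_expired(thread: Thread, now: datetime) -> bool:
    # Only ephemeral threads expire; persisted threads are kept.
    return not thread.persisted and now - thread.created_at > EPHEMERAL_TTL
```

In this sketch, sharing a thread widens read access to the whole team and stops it from expiring, but write access stays with the creator.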

Web Search

Web Search is currently in beta.

Web search is a special context that is enabled only when the SERPER_API_KEY environment variable is provided. Once enabled, users can type @Web to have the Answer Engine search the web for more relevant answers.
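
Before starting the server, it can help to confirm that the variable is actually present in the environment. The check below is a minimal sketch: `web_search_enabled` is a hypothetical helper, and treating a blank value as "not set" is an assumption, not documented Tabby behavior.

```python
import os


def web_search_enabled(env=None) -> bool:
    """Return True if SERPER_API_KEY is present and non-blank.

    Hypothetical preflight helper; Tabby's own check may differ.
    """
    env = os.environ if env is None else env
    # Assumption: a blank or whitespace-only key counts as missing.
    return bool(env.get("SERPER_API_KEY", "").strip())


if __name__ == "__main__":
    if web_search_enabled():
        print("SERPER_API_KEY is set; @Web should be available.")
    else:
        print("SERPER_API_KEY is not set; web search stays disabled.")
```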